Event Related Potentials

Goals:

- Readers should know which ERP to use in which situation

- Be able to code an algorithm that will extract P300 ERP features for a speller type program

Terms/Topics to be defined/covered ahead of this article:

- Basics of Machine Learning (features, classifiers, unsupervised, supervised)

Feature Extraction

What is feature extraction

Raw neural data, recorded from an EEG or other BCI device, contains a broad range of information about brain activity along with noise from external interference. Even with minimal noise, the raw data are complex and difficult to interpret. Feature extraction is the process of identifying and extracting meaningful information from raw neural data. This reduces both the noise and the volume of data, which makes patterns easier to identify and improves the accuracy of the BCI.

Some feature extraction methods are unsupervised: they do not learn from labelled example data, but extract the most informative structure on their own, typically by discarding redundancy and keeping the directions along which the data differ most. These methods include Principal Component Analysis (PCA), the wavelet transform, and others. Other methods, such as Common Spatial Patterns (CSP), are supervised: they require a set of labelled data to determine which patterns to extract.

Which features to extract

The choice of feature extraction methods will depend on several design choices including the type of brain processes being captured. In this document, we will focus on BCIs based on event-related potentials (ERPs).

The feature extraction step for ERP-based BCIs is fairly simple. The first step is to extract epochs. An epoch is a chunk of an EEG signal of a specified length that is synchronised with an event (e.g. the presentation of a stimulus). Statistics of these epochs are then computed to extract features. For example, one may simply compute the mean voltage recorded at each electrode site during the epoch. This feature is often sufficient for identifying ERPs.
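As a rough illustration of this step, the sketch below computes the mean voltage per electrode for each epoch using NumPy. The data are synthetic, and the array layout `(n_epochs, n_channels, n_samples)` and all sizes are assumptions made for the example, not values from a real recording.

```python
import numpy as np

# Synthetic stand-in for already-extracted epochs (assumed layout:
# epochs x channels x samples); values play the role of microvolts.
rng = np.random.default_rng(0)
n_epochs, n_channels, n_samples = 100, 8, 48
epochs = rng.normal(loc=0.0, scale=5.0, size=(n_epochs, n_channels, n_samples))

# Mean voltage over the time axis: one number per electrode per epoch.
features = epochs.mean(axis=2)

print(features.shape)  # (100, 8)
```

Each row of `features` is then one feature vector that a classifier could consume.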

Event-Related Potential

What is an event-related potential

An event-related potential, or ERP, is the electrophysiological response of the brain to a specific sensory, motor or cognitive event (e.g. a stimulus). This stimulus can be almost anything: a flashing light, a surprising sound, a blinking eyelid, etc. In all cases, a BCI using ERPs will try to isolate and identify these small, event-related responses.

How to record an ERP with EEG

A scalp-recorded EEG captures the joint activity of millions of neurons, which makes it almost impossible to isolate every individual signal that relates to an ERP. The best we can do is look at the overall voltage changes. We call a change that appears to reflect a specific psychological process an ERP component.

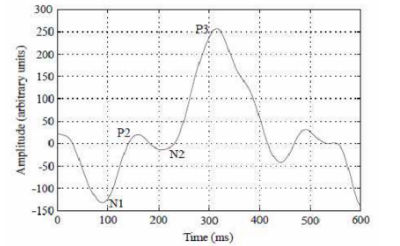

An ERP waveform (Figure 1) shows the overall voltage changes over time, starting at the onset of the stimulus. It contains several peaks, which could be relevant ERP components. Each peak is labelled by its polarity, positive (P) or negative (N), followed by a number. Short numbers (e.g. N1, P2, N2, P3) give the ordinal position of the peak in the waveform, while longer ones (e.g. P300) give its approximate latency in milliseconds after the stimulus onset (0 ms). This is the most common naming convention.

Figure 1. ERP waveform with a typical P3 wave. From (Hoffmann et al., 2008)

How to use ERPs

BCIs using ERPs are relatively easy to use because they require very little training time. They can be used to monitor attention and fatigue by studying how the brain reacts to certain external events. With visual stimuli, an ERP-based BCI can be used as an input device with a relatively quick reaction time compared to other BCIs. For more information, see An Introduction to the Event-Related Potential Technique by Steven J. Luck.

How to generate an ERP

Any sensory, motor or cognitive event can trigger an ERP, but most BCIs using ERPs are sensory-based and use either visual or auditory stimuli. Both kinds of stimuli are more easily generated by a computer than other sensory input. Also, the visual cortex has been studied for decades, which helps us interpret the effects of visual stimuli on the brain. Sensorimotor stimuli are also used in some BCIs, while olfactory and gustatory stimuli are rarely used.

The P3 family of ERPs

One of the easiest ways to make the brain generate an ERP is to design experiments that follow the oddball paradigm. In these experiments, participants are shown a repetitive sequence of standard stimuli (e.g. pictures of cars) that is occasionally interrupted by a deviant stimulus (e.g. a picture of a face). Our brain reacts to these “odd” stimuli by generating a large P3 (or P300) ERP component. The P3 peak occurs around 300-600 ms after the odd stimulus is presented. It is also called the P300 because, when it was discovered, the peak was believed to always occur at 300 ms.
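A minimal sketch of how an oddball stimulus sequence might be generated is shown below. The 20% deviant probability, the stimulus labels and the function name `oddball_sequence` are illustrative assumptions. Real experiments often add constraints, such as never presenting two deviants in a row.

```python
import random


def oddball_sequence(n_trials, p_deviant=0.2, seed=0):
    """Return a list of 'standard'/'deviant' labels with rare deviants."""
    rng = random.Random(seed)  # seeded so the sequence is reproducible
    return [
        "deviant" if rng.random() < p_deviant else "standard"
        for _ in range(n_trials)
    ]


sequence = oddball_sequence(1000)
deviant_fraction = sequence.count("deviant") / len(sequence)
print(f"{deviant_fraction:.1%} of trials are deviants")
```

Only the epochs that follow a "deviant" label are expected to contain a large P3.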

This document will mainly focus on the P3 wave, but there are other important ERP components used in BCI research, including the N2pc (related to attention), the N4 or N400 (related to language) and the error-related negativity (ERN). For further details on those components, see An Introduction to the Event-Related Potential Technique by Steven J. Luck.

The characteristics of the P3 make it very suitable for developing BCIs. First of all, the P3 response is easy to measure with EEG, which makes it suitable for non-invasive BCIs. Moreover, a P3-based BCI requires less than 10 minutes of training and works with the majority of subjects, including those with severe neurological diseases.

History of P3-based BCIs

The P3 is an ERP component first reported by Sutton and colleagues in 1965, but the first P3-based BCI was developed by Farwell and Donchin in 1988 to provide a speller (virtual keyboard) for paralysed people. Users would look at a matrix of letters on a screen and were asked to focus on the letter they wanted to input. The rows and columns of letters would flash randomly, and whenever the letter of interest was highlighted it would appear to the brain as an “oddball” stimulus. The response to this stimulus would be detected as a P3 ERP component, and the BCI would output the corresponding letter.
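The row/column idea behind such a speller can be sketched as follows. This is not Farwell and Donchin's implementation: the 6 x 6 layout, the simulated classifier scores and the helper `decode_letter` are all assumptions made for illustration. The sketch assumes that some classifier has already assigned a "P3-likeness" score to every row flash and every column flash.

```python
import numpy as np

# Hypothetical 6 x 6 speller layout.
matrix = np.array([list("ABCDEF"), list("GHIJKL"), list("MNOPQR"),
                   list("STUVWX"), list("YZ1234"), list("56789_")])


def decode_letter(row_scores, col_scores):
    """Pick the letter at the row and column with the highest mean score.

    Both inputs have shape (n_repetitions, 6): one score per flash.
    """
    row = int(np.argmax(row_scores.mean(axis=0)))
    col = int(np.argmax(col_scores.mean(axis=0)))
    return str(matrix[row, col])


# Simulated scores: noise everywhere, plus a boost whenever the flashed
# row or column contains the attended letter 'P' (row 2, column 3).
rng = np.random.default_rng(0)
n_repetitions = 10
row_scores = rng.normal(0.0, 1.0, (n_repetitions, 6))
col_scores = rng.normal(0.0, 1.0, (n_repetitions, 6))
row_scores[:, 2] += 3.0
col_scores[:, 3] += 3.0

letter = decode_letter(row_scores, col_scores)
print(letter)  # P
```

Averaging the scores over several repetitions is what makes the decision robust, at the cost of slower spelling.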

After this first P3-based BCI was introduced, not much attention was given to this technology. According to Donchin et al. (2000), there were no peer-reviewed papers on P3-based BCIs from 1988 to 2000. The next five years saw only a modest increase in P3-based BCI articles, which often relied on offline analyses, such as analyzing other groups’ data from the 2003 BCI Data Analysis Competition.

In the last decade, P3-based BCIs have emerged as one of the main BCI categories due to several appealing features. They are straightforward to use, relatively fast, effective for most users, and require almost no training. P3-based BCIs can be used for a wide range of applications including controlling virtual keyboards, controlling a mouse, supporting decision-making, and so on.

How to record, process, and classify an ERP

This section presents a hands-on tutorial on how to extract ERPs from EEG data. We use the Python MNE library, which provides good tools for EEG and MEG data analysis. In addition, this library includes EEG datasets that can be downloaded for free.

MNE Sample Dataset

We use the sample dataset provided by MNE. It was recorded in an experiment where checkerboard patterns were presented to the subject in the left and right visual fields, interspersed with tones to the left or right ear, under the following settings:

- The interval between the stimuli was 750 ms.

- Occasionally, a smiley face was presented at the center of the visual field.

- The subject was asked to press a key with the right index finger as soon as possible after the appearance of the face.

- EEG data from a 60-channel electrode cap was acquired simultaneously with MEG data.

The sample dataset can be downloaded with the following command:

    mne.datasets.sample.data_path(verbose=True)

The trigger codes for each kind of stimulus are shown in the table below:

| Name | Trigger code | Content |
|---|---|---|
| LA | 1 | Response to left-ear auditory stimulus |
| RA | 2 | Response to right-ear auditory stimulus |
| LV | 3 | Response to left visual field stimulus |
| RV | 4 | Response to right visual field stimulus |
| smiley | 5 | Response to the smiley face |
| button | 32 | Response triggered by the button press |

For more information on processing examples of this dataset check this link

Importing data

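    from pathlib import Path

    import mne
    from mne.datasets import sample

    # Path() keeps this working whether data_path() returns a str or a Path
    data_path = Path(sample.data_path())
    raw_fname = data_path / 'MEG' / 'sample' / 'sample_audvis_filt-0-40_raw.fif'
    event_fname = data_path / 'MEG' / 'sample' / 'sample_audvis_filt-0-40_raw-eve.fif'

    # Load the recording into memory and re-reference the EEG to the channel average
    raw = mne.io.read_raw_fif(raw_fname, preload=True)
    raw.set_eeg_reference()  # set EEG average reference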

EEG Montage

EEG Reference

EEG Preprocessing

Before extracting features from a signal, it is important to remove artifacts so that the relevant information embedded in the signal stands out. EEG signals are known to be very noisy, as they are easily affected by other electrical activity of the body (EOG and EMG) and by environmental artifacts (e.g. 50 or 60 Hz power line noise). The preprocessing step aims at increasing the signal-to-noise ratio (SNR) of the signals.

- Band-pass filter between 0.15 and 40 Hz

- Select the most important channels (for now, by manually removing the less important ones)
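To make the band-pass step concrete, here is a small NumPy sketch that keeps only the 0.15 to 40 Hz band of a synthetic signal by zeroing the other FFT bins. This "brick-wall" filter is an assumption chosen for brevity and can cause ringing on real data. With MNE, the usual route would be the `raw.filter(l_freq=0.15, h_freq=40.0)` method. The helper name `fft_bandpass` and the test signal are hypothetical.

```python
import numpy as np


def fft_bandpass(signal, sfreq, l_freq, h_freq):
    """Zero every frequency bin outside [l_freq, h_freq] (brick-wall filter)."""
    spectrum = np.fft.rfft(signal, axis=-1)
    freqs = np.fft.rfftfreq(signal.shape[-1], d=1.0 / sfreq)
    keep = (freqs >= l_freq) & (freqs <= h_freq)
    return np.fft.irfft(spectrum * keep, n=signal.shape[-1], axis=-1)


sfreq = 250.0                                      # assumed sampling rate (Hz)
times = np.arange(1000) / sfreq                    # 4 s of data
slow_wave = np.sin(2 * np.pi * 5 * times)          # activity we want to keep
line_noise = 0.5 * np.sin(2 * np.pi * 50 * times)  # 50 Hz power line artifact
offset = 2.0                                       # constant electrode offset

filtered = fft_bandpass(slow_wave + line_noise + offset, sfreq, 0.15, 40.0)
print(np.abs(filtered - slow_wave).max())  # close to zero
```

Both the 50 Hz component and the constant offset fall outside the pass band, so only the 5 Hz wave survives.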

Epoching

ERP components are characterized by specific temporal variations relative to the stimulus onset, so ERP-based BCIs mostly exploit the temporal information of a signal.

The ERP components can be quantified by averaging the EEG activity time-locked to a specific event. This results in a waveform associated with the processing of that specific event. The ERPs found in such tasks have a characteristic waveform with clearly identifiable components.
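The effect of time-locked averaging can be illustrated with synthetic data. In the sketch below, a hypothetical P3-like bump (5 µV, peaking 400 ms after the stimulus) is buried in much larger noise on every single trial and reappears once many epochs are averaged. All amplitudes, the sampling rate and the number of epochs are assumptions chosen for the demonstration.

```python
import numpy as np

rng = np.random.default_rng(0)
sfreq = 250.0                      # assumed sampling rate (Hz)
times = np.arange(200) / sfreq     # 0 to 0.8 s after stimulus onset

# Hypothetical P3-like deflection: Gaussian bump peaking at 0.4 s.
erp_true = 5.0 * np.exp(-0.5 * ((times - 0.4) / 0.05) ** 2)

# Each epoch is the same ERP plus independent noise (std 10, in microvolts).
n_epochs = 200
epochs = erp_true + rng.normal(0.0, 10.0, size=(n_epochs, times.size))

# Averaging across epochs attenuates the noise by about sqrt(n_epochs).
erp_estimate = epochs.mean(axis=0)
peak_latency = times[np.argmax(erp_estimate)]

print(f"single-trial noise std: {(epochs[0] - erp_true).std():.1f}")
print(f"residual noise std after averaging: {(erp_estimate - erp_true).std():.1f}")
print(f"estimated peak latency: {peak_latency:.2f} s")
```

The noise that is not time-locked to the stimulus averages out, while the time-locked component survives.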

An epoch is a chunk of EEG recording in the time domain. Here we use stimulus-locked epochs, which means that we take 0 as the onset of the stimulus and extract the first N milliseconds after it. For example, if N is 1500, we are sampling at 32 Hz and we have 100 stimuli, we obtain 100 epochs of 48 samples (1.5 s × 32 Hz) for each electrode.

Here is an example of epochs in a real-time application: insert gif
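The arithmetic in this example can be checked with a short sketch that cuts stimulus-locked epochs out of a continuous recording. The recording is random data, and the number of electrodes (8) and the stimulus spacing (one every 2 s) are assumptions added for illustration.

```python
import numpy as np

sfreq = 32.0        # sampling rate (Hz)
window_ms = 1500    # N: epoch length in milliseconds
n_stimuli = 100
n_channels = 8      # assumed number of electrodes

# Epoch length in samples: 1.5 s * 32 Hz = 48.
n_samples = int(round(window_ms / 1000 * sfreq))

# Assumed stimulus onsets (as sample indices): one stimulus every 2 s.
onsets = np.arange(n_stimuli) * int(2 * sfreq) + 10

# Synthetic continuous recording, long enough to hold the last epoch.
rng = np.random.default_rng(0)
eeg = rng.normal(size=(n_channels, onsets[-1] + n_samples))

# Slice out the samples that follow each onset, for every electrode.
epochs = np.stack([eeg[:, start:start + n_samples] for start in onsets])

print(n_samples)     # 48
print(epochs.shape)  # (100, 8, 48)
```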

Defining Epochs and computing ERP for left auditory condition

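    # Drop epochs whose peak-to-peak amplitude exceeds these thresholds (in volts)
    reject = dict(eeg=180e-6, eog=150e-6)

    # Epoch from 200 ms before to 500 ms after each left-ear auditory stimulus (trigger code 1)
    event_id, tmin, tmax = {'left/auditory': 1}, -0.2, 0.5
    events = mne.read_events(event_fname)
    epochs_params = dict(events=events, event_id=event_id, tmin=tmin, tmax=tmax, reject=reject)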

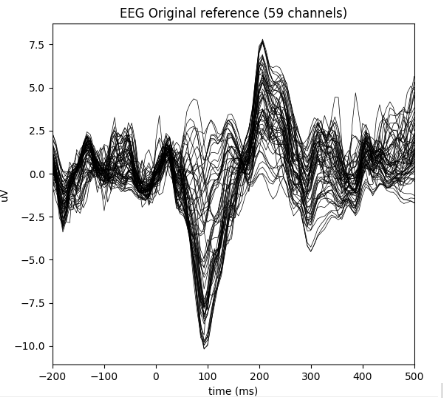

Extracting features

Since a lot of modern-day ERP processing involves machine learning, the term ‘feature’ is frequently used in ERP analysis. A feature is a measured property of a signal that is used as input to the machine learning algorithm. For example, a commonly used feature for ERPs is the average voltage of the signal at each time point across all the epochs. Extracting this feature does not require major computation or processing, and it is easy to implement in MNE:

    # Average all accepted epochs to obtain the evoked response (the ERP)
    evoked = mne.Epochs(raw, **epochs_params).average()

    title = 'EEG with average reference'
    evoked.plot(picks='eeg', titles=dict(eeg=title))

Once we have a feature vector, it can be used in the classification step of the BCI to produce meaningful outputs using machine learning (e.g. Simple Linear Regression, Linear Discriminant Analysis, Support Vector Regression).
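As a hedged illustration of the classification step, the sketch below fits a two-class Linear Discriminant Analysis by hand with NumPy. The features are simulated, with "target" epochs given a P3-like offset in mean voltage. The helper names `fit_lda` and `predict_lda`, the class sizes and the offsets are assumptions, and equal class priors are assumed. In practice one would normally use a tested library implementation and evaluate on held-out data.

```python
import numpy as np


def fit_lda(features, labels):
    """Fit two-class LDA (labels 0/1, equal priors); return weights and bias."""
    x0, x1 = features[labels == 0], features[labels == 1]
    mean0, mean1 = x0.mean(axis=0), x1.mean(axis=0)
    # Pooled within-class covariance, lightly regularised for stability.
    centered = np.vstack([x0 - mean0, x1 - mean1])
    cov = centered.T @ centered / (len(features) - 2)
    cov += 1e-6 * np.eye(cov.shape[0])
    weights = np.linalg.solve(cov, mean1 - mean0)
    bias = -0.5 * weights @ (mean0 + mean1)
    return weights, bias


def predict_lda(features, weights, bias):
    """Return 1 where the discriminant is positive, else 0."""
    return (features @ weights + bias > 0).astype(int)


# Simulated mean-voltage features for 4 channels.
rng = np.random.default_rng(0)
n_per_class = 200
non_target = rng.normal(0.0, 1.0, size=(n_per_class, 4))
target = rng.normal(0.0, 1.0, size=(n_per_class, 4)) + np.array([2.0, 1.5, 1.0, 0.5])

features = np.vstack([non_target, target])
labels = np.repeat([0, 1], n_per_class)

weights, bias = fit_lda(features, labels)
accuracy = (predict_lda(features, weights, bias) == labels).mean()
print(f"training accuracy: {accuracy:.2f}")
```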

Other methods for feature extraction

Dimension Reduction methods

Brain signals can be measured through multiple channels, but not all the information provided by these channels is relevant for understanding the underlying phenomena of interest. Dimension reduction techniques can be applied to reduce the dimension of the original data by removing irrelevant and redundant information, which also reduces the computational cost.

Principal Component Analysis (PCA)

PCA is a statistical feature extraction method that uses a linear transformation to convert a set of possibly correlated observations into a set of uncorrelated variables called principal components. The transformation generates components sorted by variance, so that the first principal component has the highest possible variance. This ordering allows PCA to separate the brain signal into different components. PCA is also a way to reduce the dimension of the features: keeping only the first few components yields fewer columns than the dimension of the input data. This decrease in dimensionality can reduce the complexity of the subsequent classification step in a BCI system.
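A minimal PCA sketch using NumPy's singular value decomposition is given below. The data are synthetic: 8 "channels" driven by only 2 underlying sources plus a little noise, which is an assumption chosen so that two components capture almost all of the variance. The function name `pca` is hypothetical.

```python
import numpy as np


def pca(data, n_components):
    """Project data (n_observations, n_features) onto its top components."""
    centered = data - data.mean(axis=0)
    # Rows of vt are the principal directions, sorted by decreasing variance.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    explained_variance = singular_values ** 2 / (len(data) - 1)
    projected = centered @ vt[:n_components].T
    return projected, explained_variance


rng = np.random.default_rng(0)
latent = rng.normal(size=(500, 2))       # 2 hidden sources
mixing = rng.normal(size=(2, 8))         # how they spread over 8 channels
data = latent @ mixing + 0.05 * rng.normal(size=(500, 8))

projected, explained_variance = pca(data, n_components=2)
ratio = explained_variance[:2].sum() / explained_variance.sum()

print(projected.shape)  # (500, 2)
print(f"variance kept by 2 components: {ratio:.3f}")
```

The two retained columns are uncorrelated with each other, and a classifier now has 2 inputs per observation instead of 8.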

Independent Component Analysis (ICA)

ICA is a statistical procedure that splits a set of mixed signals into their sources with no previous information on the nature of the signals. The only assumption involved in ICA is that the unknown underlying sources are mutually independent in statistical terms. ICA assumes that the observed EEG signal is a mixture of several independent source signals coming from multiple cognitive activities or artifacts.
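The sketch below illustrates this mixing model with a compact, NumPy-only version of the symmetric FastICA fixed-point iteration (tanh nonlinearity). It is a teaching sketch under simplifying assumptions: two synthetic, non-Gaussian sources, a fixed number of iterations and no convergence check. The function names and the mixing matrix are hypothetical. For real EEG, a maintained implementation such as the ICA tools in MNE or scikit-learn is the safer choice.

```python
import numpy as np


def whiten(x):
    """Centre x (n_signals, n_samples) and give it identity covariance."""
    x = x - x.mean(axis=1, keepdims=True)
    eigvals, eigvecs = np.linalg.eigh(np.cov(x))
    return eigvecs @ np.diag(eigvals ** -0.5) @ eigvecs.T @ x


def sym_decorrelate(w):
    """Make the rows of w orthonormal: w <- (w w^T)^(-1/2) w."""
    eigvals, eigvecs = np.linalg.eigh(w @ w.T)
    return eigvecs @ np.diag(eigvals ** -0.5) @ eigvecs.T @ w


def fast_ica(x, n_iter=200, seed=0):
    """Estimate independent sources from mixtures x (n_signals, n_samples)."""
    z = whiten(x)
    rng = np.random.default_rng(seed)
    w = sym_decorrelate(rng.standard_normal((z.shape[0], z.shape[0])))
    for _ in range(n_iter):
        g = np.tanh(w @ z)
        g_prime = 1.0 - g ** 2
        # Fixed-point update for all unmixing vectors at once.
        w_new = (g @ z.T) / z.shape[1] - np.diag(g_prime.mean(axis=1)) @ w
        w = sym_decorrelate(w_new)
    return w @ z


rng = np.random.default_rng(1)
n_samples = 5000
sources = np.vstack([rng.laplace(0.0, 1.0, n_samples),     # spiky source
                     rng.uniform(-1.0, 1.0, n_samples)])   # flat source
mixing = np.array([[1.0, 0.5], [0.4, 1.0]])                # assumed mixing
observed = mixing @ sources

estimated = fast_ica(observed)
# Match each true source to its best-correlated estimate (ICA cannot
# recover sign, scale or order, so absolute correlation is compared).
match = np.abs(np.corrcoef(np.vstack([sources, estimated]))[:2, 2:])
print(match.round(2))
```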

Wavelet Transform

Wavelets are functions of varying frequency and limited duration that allow simultaneous analysis of the signal in both the time and the frequency domain. This is in contrast to other signal analysis methods such as the Fourier Transform (FT), which only describes the signal in the frequency domain: the FT gives information about the frequency content, but not about when those frequencies occur.
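The difference can be seen on a toy signal. The sketch below applies one level of the Haar wavelet transform (the simplest wavelet, chosen here as an assumption for brevity) to a signal containing a single brief transient. The detail coefficients show where the transient occurs, whereas the Fourier magnitude spectrum is flat and carries no timing information. The helper name `haar_dwt` is hypothetical. Libraries such as PyWavelets provide full multi-level transforms.

```python
import numpy as np


def haar_dwt(signal):
    """One level of the Haar wavelet transform (signal length must be even)."""
    even, odd = signal[0::2], signal[1::2]
    approximation = (even + odd) / np.sqrt(2)  # local averages (slow content)
    detail = (even - odd) / np.sqrt(2)         # local differences (fast content)
    return approximation, detail


signal = np.zeros(64)
signal[41] = 1.0  # a brief transient at sample 41

approximation, detail = haar_dwt(signal)

# Detail coefficient k covers samples 2k and 2k + 1, so it localises the event.
transient_pair = int(np.argmax(np.abs(detail)))
print(transient_pair)  # 20, i.e. samples 40-41

# The Fourier magnitude is identical at every frequency: no timing information.
spectrum = np.abs(np.fft.rfft(signal))
print(np.allclose(spectrum, 1.0))  # True
```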

Further readings

If you are interested in broadening your knowledge of ERPs and BCIs, we recommend the following papers and books. Most of them are freely available. The main topic covered by each resource is noted at the end of its entry.

1. General on ERPs

- Luck, Steven J. An Introduction to the Event-Related Potential Technique. 2nd ed. MIT Press. The big book of ERPs

- Luck, Steven J. 2004. “Ten Simple Rules for Designing and Interpreting ERP Experiments.” In Event-Related Potentials: A Methods Handbook edited by T.C. Handy. Good tutorial for designing ERP experiments, but also useful to us

- Jung, Tzyy Ping, Scott Makeig, Marissa Westerfield, Jeanne Townsend, Eric Courchesne, and Terrence J. Sejnowski. 2001. “Analysis and Visualization of Single-Trial Event-Related Potentials” Human Brain Mapping 14 (3): 166-85. doi:10.1002/hbm.1050. Another tutorial

- Comerchero, Marco D., and John Polich. 1999. “P3a and P3b from Typical Auditory and Visual Stimuli.” Clinical Neurophysiology 110 (1): 24-30

- Picton, Terrence W. 1992. “The P300 Wave of the Human Event-Related Potential.” Journal of Clinical Neurophysiology 9 (4): 456-79. http://journals.lww.com/clinicalneurophys/Abstract/1992/10000/The_P300_Wave_of_the_Human_Event_Related_Potential.2.aspx P300

- Polich, John. 2007. “Updating P300: An Integrative Theory of P3a and P3b.” Clinical Neurophysiology 118 (10): 2128–48. doi:10.1016/j.clinph.2007.04.019. P300

- Pritchard, Walter S. 1981. “Psychophysiology of P300.” Psychological Bulletin 89 (3): 506–40. http://www.ncbi.nlm.nih.gov/pubmed/7255627. P300

- Rohrbaugh, John W., Emanuel Donchin, and Charles W. Eriksen. 1974. “Decision Making and the P300 Component of the Cortical Evoked Response.” Perception & Psychophysics 15 (2): 368–74. http://link.springer.com/article/10.3758/BF03213960. P300 and decision making.

- Snyder, Elaine, and Steven A. Hillyard. 1976. “Long-Latency Evoked Potentials to Irrelevant, Deviant Stimuli.” Behavioral Biology 16 (3): 319–31. doi:10.1016/S0091-6773(76)91447-4. P300 and N200

- Squires, Nancy K., Kenneth C. Squires, and Steven A. Hillyard. 1975. “Two Varieties of Long-Latency Waves Evoked by Unpredictable Auditory Stimuli in Man.” Electroencephalography and Clinical Neurophysiology 38 (4): 387–401. Visual and auditory P300

- Falkenstein, Michael, Jörg Hoormann, Stefan Christ, and Joachim Hohnsbein. 2000. “ERP Components on Reaction Errors and Their Functional Significance: A Tutorial.” Biological Psychology 51 (2–3): 87–107. doi:10.1016/S0301-0511(99)00031-9. This is on Error Negativity, another ERP

- Yasuda, Asako, Atsushi Sato, Kaori Miyawaki, Hiroaki Kumano, and Tomifusa Kuboki. 2004. “Error-Related Negativity Reflects Detection of Negative Reward Prediction Error.” Neuroreport 15 (16): 2561–65. doi:10.1097/00001756-200411150-00027. Error negativity

- Hillyard, Steven A., and Lourdes Anllo-Vento. 1998. “Event-Related Brain Potentials in the Study of Visual Selective Attention.” Proceedings of the National Academy of Sciences 95 (3): 781–87. Various visual ERPs

- Hsu, Yi Fang, Jarmo A. Hämäläinen, Karolina Moutsopoulou, and Florian Waszak. 2015. “Auditory Event-Related Potentials over Medial Frontal Electrodes Express Both Negative and Positive Prediction Errors.” Biological Psychology 106. Elsevier B.V.: 61–67. doi:10.1016/j.biopsycho.2015.02.001. Error negativity but elicited by auditory stimuli

2. Feature extraction

- Donchin, Emanuel, and E. F. Heffley. 1978. “Multivariate Analysis of Event-Related Potential Data: A Tutorial Review.” In Multidisciplinary Perspectives in Event-Related Brain Potential Research, edited by Dave Otto, 555–72. Washington, D.C.: U.S. Government Printing Office. Various analysis techniques for ERP-based BCIs

- Poli, Riccardo, Caterina Cinel, Luca Citi, and Francisco Sepulveda. 2010. “Reaction-Time Binning: A Simple Method for Increasing the Resolving Power of ERP Averages.” Psychophysiology 47 (3): 467–85. doi:10.1111/j.1469-8986.2009.00959.x. Paper about the limits of averaging and how they can alter the shape of ERPs

- Poli, Riccardo, Luca Citi, Mathew Salvaris, Caterina Cinel, and Francisco Sepulveda. 2010. “Eigenbrains: The Free Vibrational Modes of the Brain as a New Representation for EEG.” In 32nd Annual International Conference of the IEEE EMBS, 6011–14. doi:10.1109/IEMBS.2010.5627593. A novel feature extraction method for ERPs.

- Congedo, Marco, Alexandre Barachant, and Anton Andreev. 2013. “A New Generation of Brain-Computer Interface Based on Riemannian Geometry.” arXiv preprint arXiv:1310.8115. http://arxiv.org/abs/1310.8115. Riemannian geometry as a feature extraction method

- Al-ani, Tarik, and Dalila Trad. 2010. Signal Processing and Classification Approaches for Brain-Computer Interface. INTECH Open Access Publisher.

3. ERP-based BCIs

- Farwell, Lawrence A., and Emanuel Donchin. 1988. “Talking off the Top of Your Head: Toward a Mental Prosthesis Utilizing Event-Related Brain Potentials.” Electroencephalography and Clinical Neurophysiology 70 (6): 510–23. doi:10.1016/0013-4694(88)90149-6. The first P300 speller

- Fazel-Rezai, Reza, Brendan Z. Allison, Christoph Guger, Eric W. Sellers, Sonja C. Kleih, and Andrea Kübler. 2012. “P300 Brain Computer Interface: Current Challenges and Emerging Trends.” Frontiers in Neuroengineering 5: 14. doi:10.3389/fneng.2012.00014. A survey on P300-based BCIs

- Citi, Luca, Riccardo Poli, Caterina Cinel, and Francisco Sepulveda. 2008. “P300-Based BCI Mouse with Genetically-Optimized Analogue Control.” IEEE Transactions on Neural Systems and Rehabilitation Engineering 16 (1): 51–61. doi:10.1109/TNSRE.2007.913184. P300-based BCI mouse

- Donchin, Emanuel, and Yael Arbel. 2009. “P300 Based Brain Computer Interfaces: A Progress Report.” In Proceedings of the 5th International Conference on Foundations of Augmented Cognition, 724–31. Springer Berlin Heidelberg. doi:10.1007/978-3-642-02812-0_82. Another survey on P300-based BCIs

- Matran-Fernandez, Ana, and Riccardo Poli. 2016. “Brain-Computer Interfaces for Detection and Localisation of Targets in Aerial Images.” IEEE Transactions on Biomedical Engineering PP (99): 1–1. doi:10.1109/TBME.2016.2583200. N2pc used to detect targets in rapid serial visual presentation

- Furdea, Adrian, Sebastian Halder, D. J. Krusienski, Donald Bross, Femke Nijboer, Niels Birbaumer, and Andrea Kübler. 2009. “An Auditory Oddball (P300) Spelling System for Brain-Computer Interfaces.” Psychophysiology 46 (3): 617–25. doi:10.1111/j.1469-8986.2008.00783.x. Auditory P300 BCI speller.

- Nicolas-Alonso, Luis Fernando, and Jaime Gomez-Gil. 2012. “Brain Computer Interfaces, a Review.” Sensors 12 (2): 1211–79. doi:10.3390/s120201211. General review on BCIs

- Rebsamen, Brice, Cuntai Guan, Haihong Zhang, Chuanchu Wang, Cheeleong Teo, Marcelo H. Ang, and Etienne Burdet. 2010. “A Brain Controlled Wheelchair to Navigate in Familiar Environments.” IEEE Transactions on Neural Systems and Rehabilitation Engineering 18 (6): 590–98.

- Szafir, D. 2010. “Non-Invasive BCI through EEG.” Unpublished undergraduate honors thesis in Computer Science, Boston College.

- Lotte, F. 2008. “Study of Electroencephalographic Signal Processing and Classification Techniques towards the Use of Brain-Computer Interfaces in Virtual Reality Applications.” Doctoral dissertation, INSA de Rennes.

- van Dinteren, Rik, Martijn Arns, Marijtje L. A. Jongsma, and Roy P. C. Kessels. 2014. “P300 Development across the Lifespan: A Systematic Review and Meta-Analysis.” PLoS ONE 9 (2): e87347.

- Hoffmann, Ulrich, Jean-Marc Vesin, Touradj Ebrahimi, and Karin Diserens. 2008. “An Efficient P300-Based Brain–Computer Interface for Disabled Subjects.” Journal of Neuroscience Methods 167 (1): 115–25.